Mastering the Data Pipeline Journey: From Raw Data to Actionable Insights

Introduction

Data is the lifeblood of modern businesses. With vast amounts of information generated every second, harnessing this data effectively can transform raw numbers into actionable insights. But how do we navigate this complex world? Enter the data pipeline journey. This journey takes you from collecting and processing raw data to uncovering valuable patterns that drive strategic decisions.

In a landscape overflowing with information, understanding how to build an effective data pipeline isn’t just beneficial—it’s essential for staying competitive. Whether you’re a seasoned professional or just starting out, mastering this process will empower your organization to make informed choices based on real-time analytics. Let’s embark on this adventure together and unlock the true potential of your data!

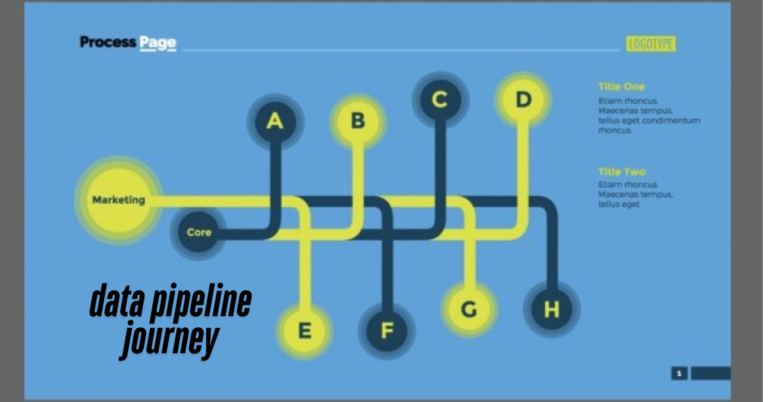

Understanding the Data Pipeline

Understanding the data pipeline begins with recognizing its fundamental purpose. It serves as a structured pathway that transforms raw data into meaningful information.

At its core, a data pipeline involves several stages: ingestion, processing, storage, and analysis. Each stage plays a crucial role in ensuring that data flows seamlessly from one point to another.

Ingestion is where it all starts. This phase collects raw data from various sources—be it databases, APIs, or real-time streams.

Once ingested, the next step is processing. Here, the data is cleaned and refined: errors are corrected, noise is filtered out, and only the relevant fields are kept.

Storage comes next; this is where processed data resides until it’s needed for analysis or reporting. Effective storage solutions ensure quick access when insights are required.

During the analysis phase, tools visualize patterns and trends hidden within your dataset. Understanding each of these stages prepares you to build an efficient pipeline tailored to your needs.
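To make the four stages concrete, here is a minimal sketch in Python using only the standard library. The sample data, the `orders` table, and the function names are assumptions made for illustration; a production pipeline would read from real sources and likely use a dedicated warehouse rather than an in-memory SQLite database.

```python
import csv
import io
import sqlite3

# Hypothetical raw input; in practice this would come from a database, API, or stream.
RAW_CSV = """order_id,region,amount
1,north,120.50
2,south,
3,north,80.00
4,south,45.25
"""

def ingest(text):
    """Ingestion: read raw rows from a source (here, CSV text)."""
    return list(csv.DictReader(io.StringIO(text)))

def process(rows):
    """Processing: drop rows with a missing amount and convert types."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # filter out incomplete records
        cleaned.append((int(row["order_id"]), row["region"], float(row["amount"])))
    return cleaned

def store(conn, records):
    """Storage: persist processed records so they can be queried later."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, region TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()

def analyze(conn):
    """Analysis: aggregate stored data into a per-region total."""
    cursor = conn.execute(
        "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region"
    )
    return dict(cursor.fetchall())

conn = sqlite3.connect(":memory:")
store(conn, process(ingest(RAW_CSV)))
totals = analyze(conn)
print(totals)
```

Each function maps to one stage, so any of them can be swapped out (for example, replacing `ingest` with an API client) without touching the others.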

The Importance of a Well-Designed Data Pipeline

A well-designed data pipeline acts as the backbone of any data-driven organization. It ensures smooth flow and transformation of raw data into meaningful insights.

When your pipeline is efficient, it minimizes delays and errors. This agility allows businesses to make decisions based on real-time information rather than outdated datasets.

Moreover, a robust pipeline fosters collaboration among teams. When everyone has access to clean, reliable data, it encourages innovation and informed decision-making.

Scalability is another significant advantage. As your business grows, a solid design adapts easily to increasing volumes without compromising performance.

In addition, security cannot be overlooked. A thoughtfully constructed pipeline safeguards sensitive information while maintaining compliance with regulations.

Investing in a well-structured data pipeline enhances overall productivity and drives success across various sectors.

Key Components of a Data Pipeline

A data pipeline is built on several key components that work together seamlessly. Each element plays a pivotal role in transforming raw data into valuable insights.

First, there’s the data ingestion layer. This part collects and imports data from various sources, ensuring nothing important is missed. It can handle real-time streams or batch uploads depending on your needs.
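The difference between real-time and batch ingestion can be sketched with Python generators. This is an illustrative assumption about how the two modes might look in code, not a reference to any particular ingestion tool; `stream_events` and `batch_events` are hypothetical names.

```python
from itertools import islice

def stream_events(source):
    """Streaming ingestion: hand over each event as soon as it is available."""
    for event in source:
        yield event

def batch_events(source, batch_size):
    """Batch ingestion: group events into lists of at most batch_size items."""
    iterator = iter(source)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            break
        yield batch

# Hypothetical events standing in for rows, messages, or API responses.
events = [{"id": i} for i in range(5)]

streamed = list(stream_events(events))
batches = list(batch_events(events, batch_size=2))
print([len(batch) for batch in batches])
```

Streaming keeps latency low at the cost of per-event overhead, while batching trades some delay for fewer, larger writes downstream.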

Next comes the processing stage. Here, the raw information gets cleaned and transformed. Techniques like filtering out duplicates or converting formats are crucial for maintaining quality.
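As a rough illustration of those two techniques, the sketch below removes duplicate records and converts dates to a single format. The field names (`id`, `signup`) and the two accepted date formats are assumptions chosen for the example.

```python
from datetime import datetime

# Hypothetical records containing a duplicate and mixed date formats.
raw = [
    {"id": "a1", "signup": "2024-01-15"},
    {"id": "a2", "signup": "15/02/2024"},
    {"id": "a1", "signup": "2024-01-15"},
]

DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")  # assumed input formats

def to_iso_date(value):
    """Convert a date string in any accepted format to ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date format: {value!r}")

def dedupe_and_normalize(records):
    """Keep the first record per id and normalize its signup date."""
    seen = set()
    result = []
    for record in records:
        if record["id"] in seen:
            continue  # skip duplicates
        seen.add(record["id"])
        result.append({"id": record["id"], "signup": to_iso_date(record["signup"])})
    return result

clean = dedupe_and_normalize(raw)
print(clean)
```

Raising an error on an unknown format, rather than passing the value through, keeps malformed dates from silently reaching storage.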

Storage follows processing, where transformed data resides in databases or warehouses. Choosing the right storage solution ensures efficient retrieval when analysis begins.

Visualization tools help present findings effectively. They turn complex datasets into understandable graphics and reports tailored for decision-makers to act upon quickly.

These components combine to create a robust framework essential for any successful data pipeline journey.

Step-by-Step Guide to Building an Effective Data Pipeline

Building an effective data pipeline starts with defining your objectives. Know what insights you want to derive or problems you aim to solve.

Next, choose the right tools and technologies that fit your requirements. Consider factors like scalability, ease of use, and cost-effectiveness when making this decision.

Data ingestion comes next. Gather raw data from various sources such as databases, APIs, or streaming platforms. Make the process reliable and repeatable so that data arrives in a consistent flow.

Once ingested, focus on data transformation. Cleanse and format your data so it becomes usable for analysis. This step is crucial for maintaining accuracy in insights.

Finally, implement monitoring solutions to track performance and detect issues in real time. Regular audits will keep your pipeline efficient and reliable as your needs evolve.
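One lightweight way to approach monitoring is to wrap each stage so that it records timing and row counts. The sketch below assumes stages are plain functions that take and return a list of rows; the `monitored` decorator and the in-memory `metrics` list are hypothetical stand-ins for a real metrics or alerting system.

```python
import logging
import time
from functools import wraps

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("pipeline")

metrics = []  # in-memory stand-in for a real metrics store

def monitored(stage_name):
    """Record duration and row counts for a stage, and log any failure."""
    def decorator(func):
        @wraps(func)
        def wrapper(rows):
            start = time.perf_counter()
            try:
                result = func(rows)
            except Exception:
                logger.exception("stage %s failed", stage_name)
                raise
            elapsed = time.perf_counter() - start
            metrics.append({
                "stage": stage_name,
                "rows_in": len(rows),
                "rows_out": len(result),
                "seconds": elapsed,
            })
            logger.info("stage %s: %d rows in, %d rows out, %.4fs",
                        stage_name, len(rows), len(result), elapsed)
            return result
        return wrapper
    return decorator

@monitored("drop_empty")
def drop_empty(rows):
    """Example stage: remove empty records."""
    return [row for row in rows if row]

output = drop_empty([{"id": 1}, {}, {"id": 2}])
```

Comparing `rows_in` with `rows_out` over time is a simple way to notice when a stage suddenly starts dropping far more data than usual.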

Common Challenges and How to Overcome Them

Data pipelines often face significant hurdles that can impede progress. One common challenge is data quality. Inaccurate or incomplete data can lead to misleading insights. Implementing validation checks at various stages of the pipeline can help maintain high standards.
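A validation check can be as simple as a function that lists what is wrong with a record, so that bad rows are set aside instead of flowing downstream. The rules and field names below (`email`, `amount`) are assumptions for illustration; real checks depend on your own data.

```python
def validate(record):
    """Return a list of problems found in one record (empty if it is valid)."""
    problems = []
    email = record.get("email")
    if not email or "@" not in email:
        problems.append("email missing or malformed")
    amount = record.get("amount")
    if not isinstance(amount, (int, float)) or amount < 0:
        problems.append("amount must be a non-negative number")
    return problems

def split_valid(records):
    """Separate valid records from rejected ones, keeping the reasons."""
    valid, rejected = [], []
    for record in records:
        problems = validate(record)
        if problems:
            rejected.append((record, problems))
        else:
            valid.append(record)
    return valid, rejected

# Hypothetical sample records.
records = [
    {"email": "a@example.com", "amount": 10},
    {"email": "", "amount": 5},
    {"email": "b@example.com", "amount": -1},
]

valid, rejected = split_valid(records)
for record, problems in rejected:
    print(record, problems)
```

Keeping the rejection reasons alongside each record makes it easier to trace quality problems back to the source that produced them.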

Another issue is integration complexity. With multiple data sources, ensuring seamless connectivity becomes daunting. Utilizing middleware solutions or APIs can simplify this process and enhance compatibility.
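In code, one common way to tame integration complexity is to write a small adapter per source that maps its fields onto a shared schema. The two sources below (a CRM export in CSV and a billing feed in JSON) and all their field names are hypothetical.

```python
import csv
import io
import json

# Hypothetical payloads from two systems that describe the same customer differently.
CRM_CSV = "customer_id,full_name\n7,Ada Lovelace\n"
BILLING_JSON = '[{"custId": 7, "name": "Ada Lovelace", "plan": "pro"}]'

def from_crm(text):
    """Adapter: map CRM CSV rows onto the shared schema."""
    for row in csv.DictReader(io.StringIO(text)):
        yield {
            "customer_id": int(row["customer_id"]),
            "name": row["full_name"],
            "source": "crm",
        }

def from_billing(text):
    """Adapter: map billing JSON items onto the shared schema."""
    for item in json.loads(text):
        yield {
            "customer_id": item["custId"],
            "name": item["name"],
            "source": "billing",
        }

unified = list(from_crm(CRM_CSV)) + list(from_billing(BILLING_JSON))
print(unified)
```

Because every adapter emits the same keys, the rest of the pipeline never needs to know which system a record came from.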

Scalability also poses a risk as businesses grow. A rigid pipeline may struggle under increased loads, resulting in performance drops. Leveraging cloud-based tools offers flexibility and scalability without major overhauls.

Security concerns shouldn’t be overlooked. Protecting sensitive information must be a priority throughout the data pipeline journey. Regular audits and robust encryption practices ensure compliance with regulations while safeguarding privacy.

Real-Life Examples of Successful Data Pipelines

Many companies have harnessed the power of effective data pipelines to drive growth and innovation. One notable example is Netflix. They use a sophisticated pipeline to gather user viewing habits, which informs content recommendations tailored to individual tastes. This not only enhances user experience but also boosts engagement.

Another intriguing case is Airbnb’s approach. They leverage real-time data from their listings and customer interactions to optimize pricing strategies dynamically. By analyzing trends in booking behaviors, they can adjust prices instantly, maximizing revenue while providing value for customers.

In retail, Amazon stands out with its extensive data pipeline that processes vast quantities of transactional and behavioral data daily. This enables them to streamline inventory management and predict consumer demand accurately.

These examples illustrate how diverse industries use well-structured data pipelines to turn raw information into strategic advantages.

Conclusion: Harnessing the Power of Data for Business Success

Harnessing the power of data is essential for any business aiming to thrive in today’s competitive landscape. A well-structured data pipeline journey transforms raw information into valuable insights that drive decision-making and strategy.

By understanding each stage—from collection through processing and analysis—organizations can ensure they are leveraging their data effectively. The importance of a robust pipeline cannot be overstated; it serves as the backbone for analytical endeavors.

When companies invest in key components, such as quality tools and technologies, they pave the way for success. By addressing common obstacles head-on, businesses can streamline their processes, mitigate risks, and enhance performance.

Real-life examples showcase how successful organizations have optimized their data pipelines to achieve remarkable results. These stories inspire us all to take action on our own journeys.

As you embark on your data pipeline journey, remember: every step taken toward clarity and insight brings you closer to unlocking your business’s full potential.

FAQs

1. What is the “Data Pipeline Journey”?

The data pipeline journey refers to the process of collecting, processing, storing, and analyzing raw data to transform it into actionable insights. This journey helps organizations unlock the value hidden in their data, enabling better decision-making and business strategies.

2. Why is a well-designed data pipeline important for businesses?

A well-designed data pipeline ensures smooth data flow, minimizes errors, and provides timely insights. It enhances productivity, fosters collaboration, scales with business growth, and maintains security, making it essential for data-driven decision-making.

3. What are the main stages of a data pipeline?

The key stages include data ingestion (collecting data from various sources), processing (cleaning and transforming data), storage (organizing data for easy access), and analysis (extracting valuable insights for decision-making).

4. What tools are needed to build a successful data pipeline?

To build an effective data pipeline, businesses need tools for data ingestion, processing, storage, and visualization. The right tools should be scalable, cost-effective, and capable of handling large data volumes seamlessly.

5. How can companies overcome common data pipeline challenges?

Companies can address challenges like data quality, integration complexity, scalability, and security by using validation checks, leveraging middleware solutions, opting for cloud-based tools, and ensuring robust encryption and audits throughout the pipeline.